A digital arms race is underway as major technology platforms roll out filtering tools to counter the growing tide of low-quality artificial intelligence content flooding online ecosystems. This synthetic media, dubbed ‘AI slop’ by industry experts, ranges from cats painting pictures to manipulated depictions of celebrities and animated characters promoting products.

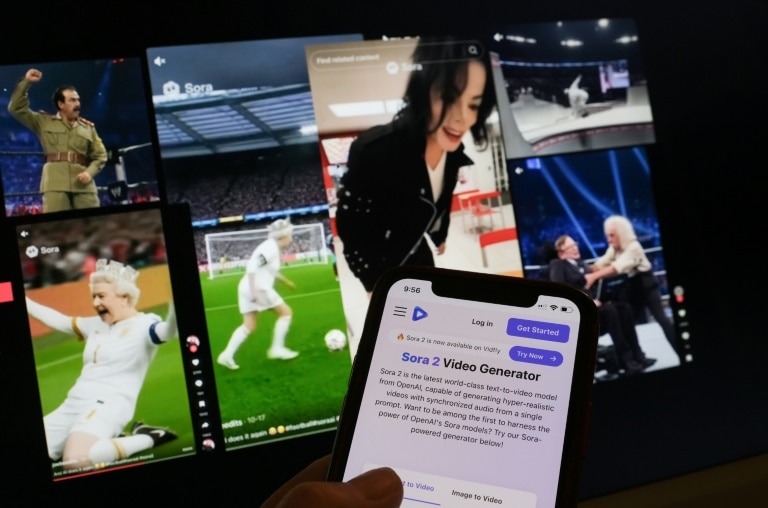

Advanced generative AI tools such as Google’s Veo and OpenAI’s Sora have democratized the creation of hyper-realistic imagery from simple text prompts. This accessibility has fueled an explosion of synthetic content that is increasingly inundating social networks, raising what YouTube CEO Neal Mohan calls ‘concerns about low-quality content’.

Swiss engineer Yves, who asked to be identified only by his first name, described the phenomenon to AFP as ‘cheap, bland and mass-produced’, a sentiment echoed in discussions on platforms such as Reddit. In response, platforms including Pinterest and TikTok have introduced user-controlled filters that let people exclude AI-generated material from their feeds.

Meta’s Instagram and Facebook offer only limited tools for reducing such content and no explicit filter, whereas YouTube has taken a multi-faceted approach that includes stronger labeling. These measures go beyond earlier industry efforts, which focused mainly on authenticating videos to curb misinformation.

Meanwhile, philosophical divisions are emerging over the fundamental value of AI content. Microsoft CEO Satya Nadella urges moving beyond the ‘slop versus sophistication’ debate to embrace AI’s potential to amplify human creativity and productivity. Content creator Bob Doyle likewise argues that ‘the criticism of AI slop is the criticism of some individual’s creative expression’, expression that may represent artistic ideas in their infancy.

Smaller platforms are taking more radical steps. The music streaming service Coda Music, which has about 2,500 users, lets listeners block AI content entirely from suggested playlists and labels accounts as ‘AI artists’ once they have been reported by the community and verified. Similarly, Cara, a social network for artists with more than a million users, combines algorithmic and human moderation to preserve the ‘human connection’ that founder Jingna Zhang describes as essential to creative expression.